Large Language Models for Formal Theorem Proving

Introduction

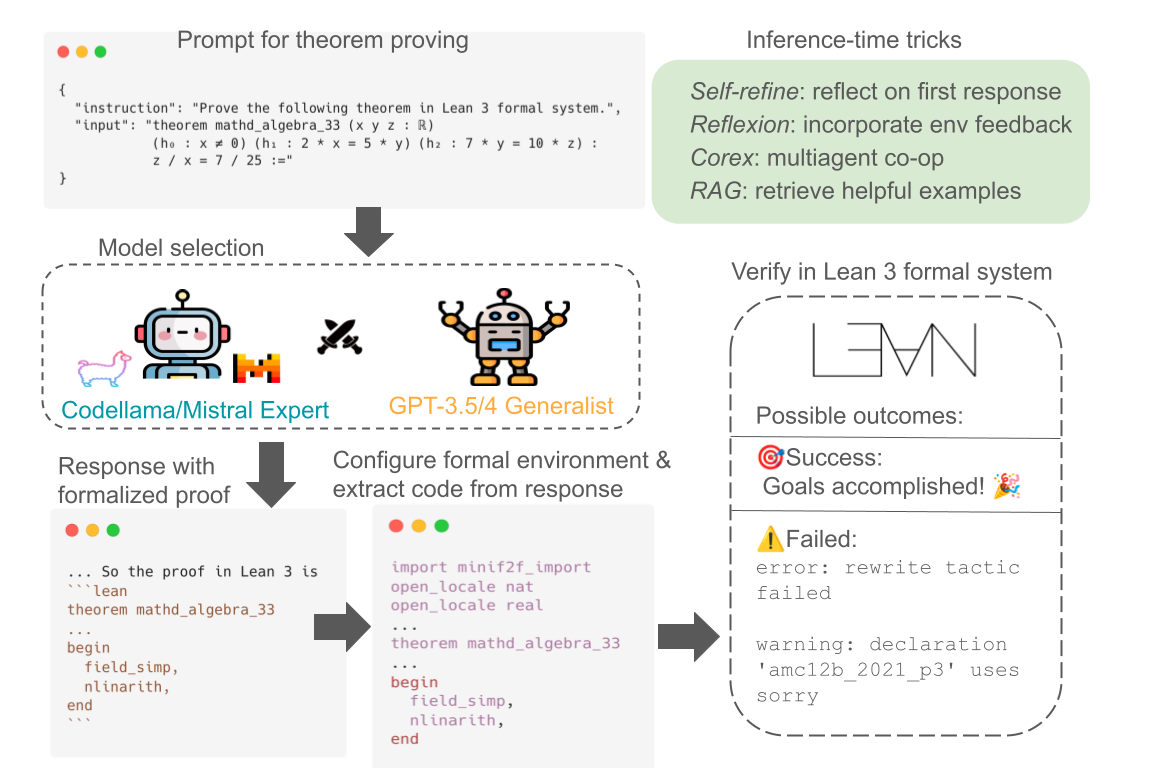

In recent advancements within the realm of deep learning (DL), substantial progress has been witnessed, particularly in the development and deployment of large language models (LLMs). These models have demonstrated exceptional efficacy across a spectrum of linguistic tasks, including translation, summarization, and question-answering, showcasing their profound capabilities in language comprehension and generation. However, the application of LLMs in the domain of formal theorem proving (FTP) — a discipline characterized by intricate reasoning and mathematical logic to verify the veracity of statements or to establish proofs — has been relatively underexplored. Despite the traditionally labor-intensive nature of FTP, its advancement holds immense value due to the extensive range of mathematical formalisms it encompasses and its considerable potential for real-world applications.

During the 2023-24 academic year, our investigation embarked on an expansive survey of the existing methodologies in this niche, against the backdrop of recent LLM advancements and the integration of newly-contributed datasets. This endeavor led to the development of fine-tuned expert models specifically designed for FTP tasks. Our research rigorously evaluated these models against the miniF2F benchmark, a leading standard in the field, with the intent to address two pivotal research questions: the comparative adeptness of expert versus general models in FTP, and the efficiency of different prompting techniques in enhancing model performance.

As a contribution towards fostering community engagement and application, we seamlessly integrated our expert models into the Lean 3 formal system. This integration is materialized through the development of a VS Code extension, aimed at enhancing the accessibility and utility of our models for FTP purposes.

People

Member: Xijia Tao

Contact: xjtao2333 [at] connect.hku.hk

Supervisor: Lingpeng Kong

-

Helpful Links

[Update 2024/4/27] A summary of our production results, including codebase, model weights, and more. Enjoy ~…

-

Final Report

[Update 2024/4/27] Our final report is out! Check out our results and contribution here.

-

Interim Report

[Update 2023/1/21] View the interim report here in pdf format.

-

Detailed Project Plan

[Update 2023/10/1] Download pdf file for our final year project Large Language Model for Interactive Formal…