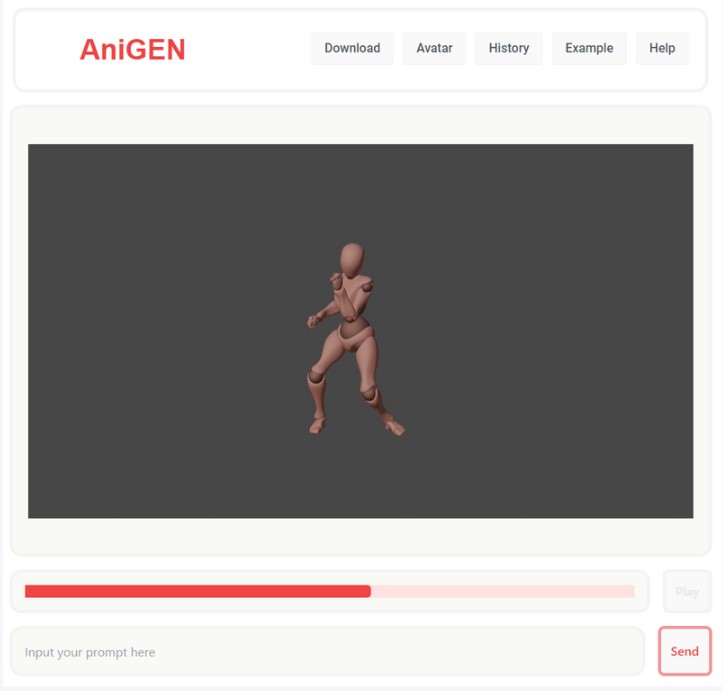

AniGEN

Natural Language-Driven AI Avatar Motion with Video-Based Motion Data

Introduction

In this project, we implemented a method to transform a text prompt into a 3D avatar animation sequence using Natural Language Processing (NLP) and motion data blending. AniGEN aims to help 3D animators and game developers produce high-quality animations that are modifiable, editable, interactive and consistent, through an easy-to-use web-based interface. The user provides a text prompt describing the motion; once rendering is complete, the output animation is available both as a video and as a downloadable, ready-to-edit FBX file.

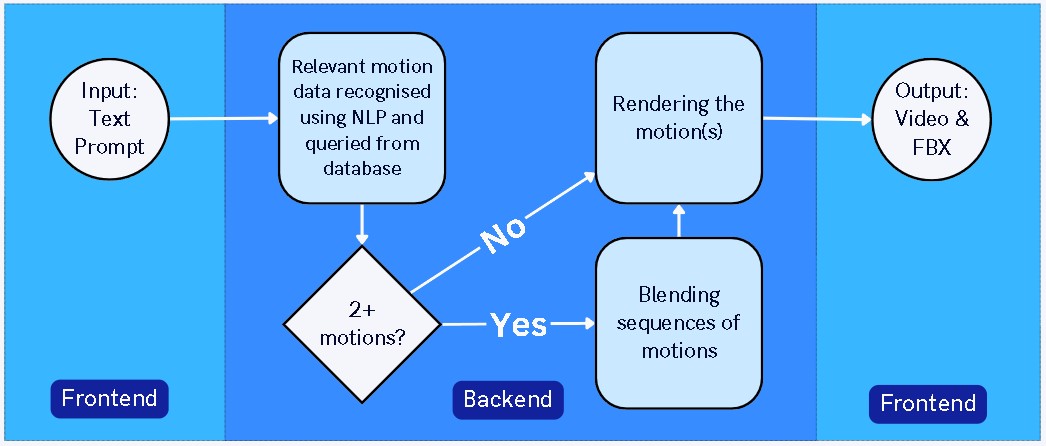

Workflow Overview

The user first enters a text prompt in the web app, for example, “walk and then run backwards”. The prompt is passed from the front end to the back end, where a Natural Language Processing (NLP) model transforms it into an array of file names identifying motion data held in a database.
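The prompt-to-file-names step can be illustrated with a minimal sketch. It assumes the language model is asked to reply with a JSON array of file names and that the reply is checked against the set of names known to the database; the catalog contents, the file names, and the function `parse_motion_files` are hypothetical and are not taken from the AniGEN code.

```python
import json

# Hypothetical catalog: in practice the known file names would come from the database.
MOTION_CATALOG = {"walk.fbx", "run_backwards.fbx", "jump.fbx"}


def parse_motion_files(llm_reply: str, catalog: set[str]) -> list[str]:
    """Parse a reply expected to be a JSON array of file names.

    Names that are not in the catalog are dropped, and the order of the
    remaining names is preserved so the motions play in prompt order.
    """
    try:
        names = json.loads(llm_reply)
    except json.JSONDecodeError:
        return []  # the reply was not valid JSON
    if not isinstance(names, list):
        return []  # valid JSON, but not an array
    return [n for n in names if isinstance(n, str) and n in catalog]


# Example reply for the prompt "walk and then run backwards",
# with one unknown file name that gets filtered out.
motions = parse_motion_files(
    '["walk.fbx", "run_backwards.fbx", "fly.fbx"]', MOTION_CATALOG
)
print(motions)
```

Validating the reply against known names keeps a malformed or invented file name from reaching the blending step.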

If the prompt is converted into a sequence of two or more motions, the motions will be blended into one coherent motion. The blended motion will then be rendered, and passed back to the front end as both a video and an editable FBX file.

Features

1. Importing FBX files automatically
2. Blending the motions automatically
3. Rendering motions automatically
4. Exporting the final video and editable FBX file

Tech Stack

We developed our front-end web app with Node.js, a JavaScript runtime environment, and React, a JavaScript library. Both were chosen for their extensive library ecosystems and their ease of setup.

The web app also makes use of CSS frameworks such as TailwindCSS and Bootstrap. In particular, TailwindCSS allows a unified CSS stylesheet for the whole web app, eliminating the need for a separate stylesheet on every page.

On the back end, Google Gemini is the Large Language Model (LLM) used to obtain file names from prompts using NLP. It was selected because it is readily available and accessible to the project team.

Google Firebase is the database in which the project team stores its motion data, chosen because its storage capacity and cost suit the project. The motion data are in turn taken from Mixamo, a motion data library from Adobe.

Blender is the software used for motion blending and rendering. In particular, its Non-Linear Animation (NLA) function is used to blend motions due to its extensive documentation as well as its status as an industry standard.
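To make the idea of blending a sequence of motions more concrete, the sketch below works out where each motion could sit on a timeline so that consecutive motions overlap by a fixed number of frames, during which one fades into the next. It is plain arithmetic and does not call Blender; the `Strip` class, the `layout_strips` function, the clip lengths, and the 10-frame overlap are illustrative assumptions rather than the project's actual settings.

```python
from dataclasses import dataclass


@dataclass
class Strip:
    name: str
    start: int      # first frame of the strip on the timeline
    end: int        # last frame of the strip on the timeline
    blend_in: int   # number of frames over which the strip fades in


def layout_strips(clips: list[tuple[str, int]], overlap: int) -> list[Strip]:
    """Place clips back to back so each one overlaps the previous by `overlap` frames.

    `clips` is a list of (name, length_in_frames) pairs in playback order.
    """
    strips = []
    cursor = 1  # start the timeline at frame 1
    for index, (name, length) in enumerate(clips):
        if length <= overlap:
            raise ValueError(f"clip {name!r} is too short for a {overlap}-frame overlap")
        blend_in = overlap if index > 0 else 0  # the first clip has nothing to fade from
        start = cursor
        end = start + length - 1
        strips.append(Strip(name, start, end, blend_in))
        cursor = end - overlap + 1  # the next clip starts inside this clip's tail
    return strips


# Hypothetical clip lengths for "walk and then run backwards".
timeline = layout_strips([("walk", 40), ("run_backwards", 30)], overlap=10)
for strip in timeline:
    print(strip)
```

With these assumed values, the second motion starts at frame 31 while the first ends at frame 40, giving a 10-frame window in which the two motions are mixed.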

Python is one of the main scripting languages used by the project team. It is used for scripting Blender and for creating the AniGEN-blender-utils and AniGEN-flask-app tools. Its easy-to-understand documentation and intuitive structure have made it the language of choice for the project team.

Flask, a micro web framework written in Python, is used by the project team to invoke shell commands from the web app, such as running a Blender process to blend and render motions. In this way it facilitates communication between the front end and the back end.
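As a rough illustration of how a web handler might launch Blender, the sketch below builds the command line for a headless Blender run. The `--background` and `--python` options and the `--` separator, after which arguments are left for the script to read, are standard Blender command-line usage; the script name, the `--output` argument, the paths, and the function `build_blender_command` are hypothetical.

```python
import shlex


def build_blender_command(
    script_path: str,
    motion_files: list[str],
    output_path: str,
    blender_bin: str = "blender",
) -> list[str]:
    """Build the argument list for running a Blender script without the UI.

    Everything after "--" is ignored by Blender itself and is available to
    the script; the "--output" argument is an assumed convention of this sketch.
    """
    if not motion_files:
        raise ValueError("at least one motion file is required")
    return [
        blender_bin,
        "--background",         # run headless
        "--python", script_path,
        "--",                   # remaining arguments are for the script
        "--output", output_path,
        *motion_files,
    ]


command = build_blender_command(
    "blend_and_render.py",
    ["walk.fbx", "run_backwards.fbx"],
    "out/result.mp4",
)
print(shlex.join(command))  # human-readable form, useful for logging
```

Passing the command as a list to `subprocess.run(command, check=True)`, rather than as a single shell string, avoids shell interpretation of values that originate from user input.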

Who are we?

We are Marzukh Akib Asjad, James Olano and Mikael Lau, a trio of final-year bachelor's students in the Department of Computer Science at the University of Hong Kong.

We are glad to have Professor Taku Komura as our project supervisor, and Professor Yizhou Yu as our second examiner.

GitHub Repositories

Repository for the AniGEN web app, which serves as the user interface and the front end.

Repository for the AniGEN Flask app, which serves as the controller between the web app and the back end.

Repository for AniGEN Blender utilities, which facilitate the blending and rendering of motions in the back end.