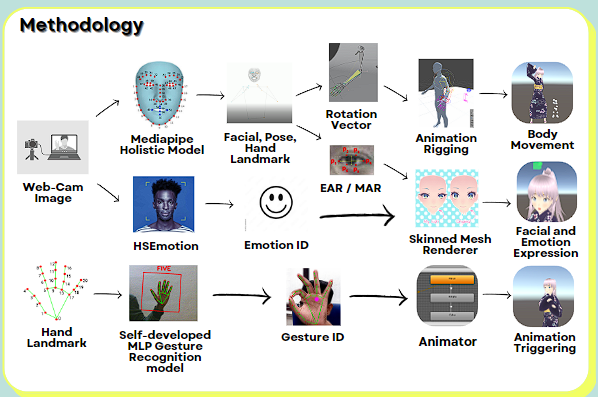

Methodology for using AI to control a virtual character

AI Models

Landmark Detection Model

The MediaPipe Holistic model estimates pose, face, and hand landmarks from real-time webcam images.
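MediaPipe reports each landmark's x and y normalized by the image width and height, with z as a relative depth value, and a body part that was not detected (for example, a hand out of frame) has no landmarks for that frame. The minimal sketch below converts such landmarks to pixel coordinates. It uses a simple stand-in `Landmark` type rather than MediaPipe's own result objects, and the helper name `to_pixels` is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Landmark:
    # Stand-in for a MediaPipe landmark: x, y normalized by image size; z is relative depth.
    x: float
    y: float
    z: float


def to_pixels(landmarks: Optional[List[Landmark]], width: int, height: int) -> List[Tuple[int, int]]:
    """Convert normalized landmarks to integer pixel coordinates.

    Returns an empty list when the part was not detected (landmarks is None),
    so callers can simply skip that part for the current frame.
    """
    if landmarks is None:
        return []
    return [(round(lm.x * width), round(lm.y * height)) for lm in landmarks]
```

For example, a landmark at (0.5, 0.25) in a 640×480 frame maps to pixel (320, 120).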

Emotion Recognition Model

HSEmotion (High-Speed face Emotion recognition) estimates emotions from real-time webcam images.
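Per-frame emotion predictions from a live webcam can flicker between classes. One way to turn them into a stable emotion ID is an exponential moving average followed by an argmax. This is a sketch under assumptions, not part of HSEmotion itself: it assumes the recognizer yields one score per emotion class for each frame (for example, class probabilities), and the `EmotionSmoother` class and its smoothing factor `alpha` are hypothetical.

```python
from typing import List, Optional


class EmotionSmoother:
    """Hypothetical helper: exponential moving average over per-frame emotion scores.

    `alpha` is an assumed smoothing factor; higher values react faster to changes.
    """

    def __init__(self, num_classes: int, alpha: float = 0.3) -> None:
        self.num_classes = num_classes
        self.alpha = alpha
        self.state: Optional[List[float]] = None

    def update(self, scores: List[float]) -> int:
        """Blend this frame's scores into the running average and return the emotion ID."""
        if len(scores) != self.num_classes:
            raise ValueError("expected one score per emotion class")
        if self.state is None:
            self.state = list(scores)
        else:
            self.state = [self.alpha * s + (1 - self.alpha) * p
                          for s, p in zip(scores, self.state)]
        # The emotion ID is the index of the highest smoothed score.
        return max(range(self.num_classes), key=lambda i: self.state[i])
```

With `alpha=0.3`, a single frame favoring a different class does not immediately flip the reported emotion; the new class has to dominate for several frames.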

Gesture Recognition Model

A self-developed MLP (multilayer perceptron) classification model estimates gestures from the hand landmark data produced by the MediaPipe Holistic model.
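The sketch below shows one plausible form of such a classifier's inference path. The preprocessing is an assumption, not necessarily the author's: it takes the 21 hand landmarks relative to the wrist (landmark 0 in MediaPipe's hand model), flattens them to 42 values, and divides by the largest absolute value so the features do not depend on hand position or size. The layer weights would come from training, and the names `preprocess`, `dense`, and `predict_gesture` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])


def preprocess(hand: Sequence[Point]) -> List[float]:
    """Make hand landmarks translation- and scale-invariant (assumed scheme).

    Coordinates are taken relative to the wrist (landmark 0), flattened to
    [x0, y0, x1, y1, ...], and divided by the largest absolute value.
    """
    wx, wy = hand[0]
    flat = [c for x, y in hand for c in (x - wx, y - wy)]
    scale = max(abs(c) for c in flat) or 1.0  # avoid division by zero
    return [c / scale for c in flat]


def dense(x: List[float], layer: Layer, relu: bool) -> List[float]:
    """One fully connected layer, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


def predict_gesture(hand: Sequence[Point], layers: List[Layer]) -> int:
    """Run the MLP (ReLU on hidden layers) and return the argmax class as the gesture ID."""
    x = preprocess(hand)
    for i, layer in enumerate(layers):
        x = dense(x, layer, relu=i < len(layers) - 1)
    # Softmax is monotonic, so the argmax over logits equals the argmax over probabilities.
    return max(range(len(x)), key=lambda k: x[k])
```

Normalizing relative to the wrist is a common choice because the same gesture then yields similar features wherever the hand appears in the frame.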

Virtual Character Control Mechanism

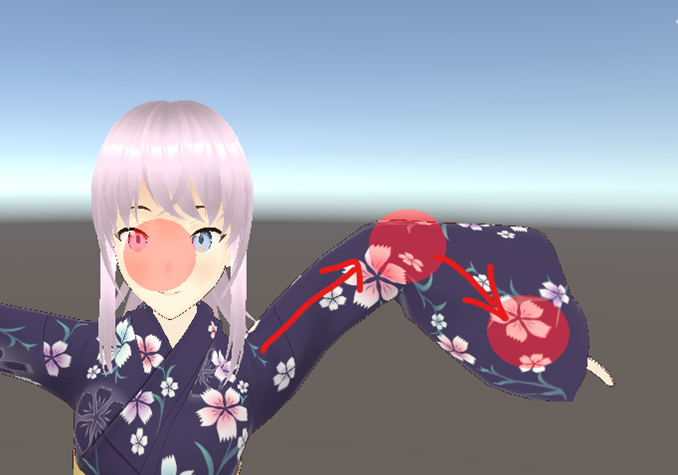

Body Movement

Rotation vectors are calculated from the landmark data and applied to the avatar's bones through the Multi-Aim Constraint and Multi-Rotation Constraint components of Unity's Animation Rigging package.
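A Multi-Aim Constraint rotates a bone so that it points at a target object, so one way to drive it from landmarks is to compute the direction between two joints (for example, shoulder to elbow) and place the target along that direction from the bone. The sketch below shows that geometry plus yaw/pitch angles that could feed a rotation source. The axis conversion is an assumption (image y points down and MediaPipe z grows away from the camera), and the correct signs depend on how the avatar and camera are oriented in the scene. All function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def to_unity_axes(p: Vec3) -> Vec3:
    """Assumed mapping to a y-up frame: negate image y and MediaPipe z. Verify against your rig."""
    return (p[0], -p[1], -p[2])


def direction(a: Vec3, b: Vec3) -> Vec3:
    """Unit vector pointing from landmark a to landmark b (e.g. shoulder -> elbow)."""
    dx, dy, dz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    n = math.sqrt(dx * dx + dy * dy + dz * dz)
    if n == 0:
        raise ValueError("landmarks coincide; direction is undefined")
    return (dx / n, dy / n, dz / n)


def aim_target(bone_pos: Vec3, d: Vec3, distance: float = 1.0) -> Vec3:
    """Place the aim target `distance` units along d from the bone, so the bone points along d."""
    return tuple(p + distance * c for p, c in zip(bone_pos, d))


def yaw_pitch_deg(d: Vec3) -> Tuple[float, float]:
    """Yaw (about the up axis, measured from +z) and pitch (elevation) of d, in degrees."""
    x, y, z = d
    yaw = math.degrees(math.atan2(x, z))
    pitch = math.degrees(math.atan2(y, math.hypot(x, z)))
    return yaw, pitch
```

Only the direction matters to an aim constraint, so `distance` can be any positive value; keeping it fixed avoids jitter from noisy landmark depth.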

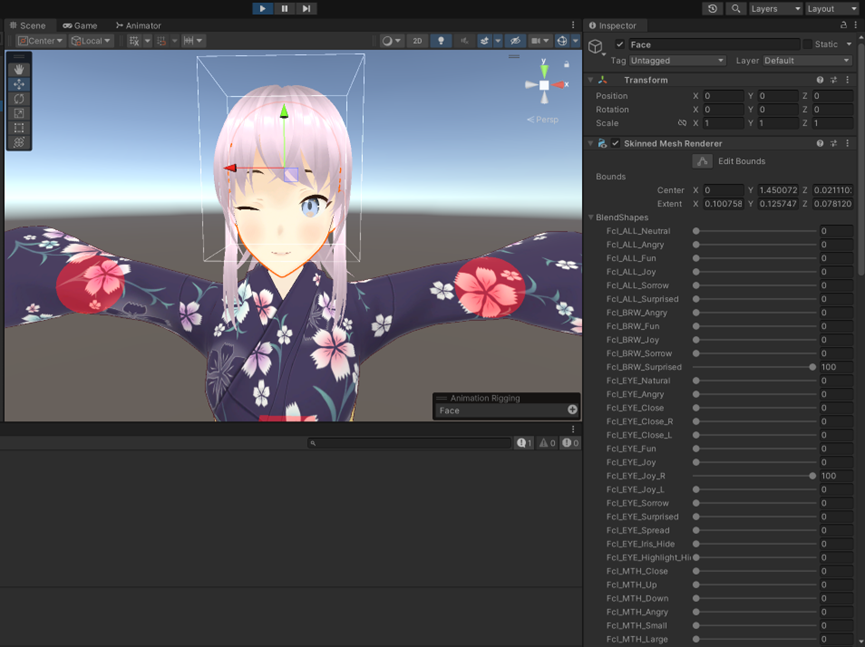

Facial and Emotion Expression

The mouth aspect ratio (MAR) and eye aspect ratio (EAR) are calculated from the face landmark data and applied to blend shape weights of the Skinned Mesh Renderer to control how far the avatar's mouth and eyes open. The detected emotion ID is likewise applied to the emotion blend shape weights of the Skinned Mesh Renderer to control the emotional expression.
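A common way to compute EAR, and by analogy MAR, uses six points around the eye or mouth: two horizontal corners and two pairs of matching upper and lower edge points, with EAR = (|p2 − p6| + |p3 − p5|) / (2 |p1 − p4|). The sketch below computes that ratio, maps it linearly onto Unity's usual 0–100 blend shape weight range, and turns an emotion ID into one-hot emotion weights. Which MediaPipe face mesh indices supply the six points, the `closed`/`opened` calibration values, and the blend shape names are assumptions left to the caller. The function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def aspect_ratio(p: Sequence[Point]) -> float:
    """Six-point aspect ratio used for both EAR and MAR.

    p[0] and p[3] are the horizontal corners; p[1], p[2] lie on the upper edge and
    p[5], p[4] are the matching points on the lower edge:
        (|p[1] - p[5]| + |p[2] - p[4]|) / (2 * |p[0] - p[3]|)
    """
    d = math.dist
    return (d(p[1], p[5]) + d(p[2], p[4])) / (2.0 * d(p[0], p[3]))


def ratio_to_weight(ratio: float, closed: float, opened: float) -> float:
    """Linearly map a ratio to a 0-100 blend shape weight, clamped at both ends.

    `closed` and `opened` are per-user calibration values (hypothetical).
    """
    t = (ratio - closed) / (opened - closed)
    return 100.0 * min(1.0, max(0.0, t))


def emotion_weights(emotion_id: int, blend_shapes: List[str]) -> Dict[str, float]:
    """Give the detected emotion's blend shape full weight and zero all others."""
    return {name: (100.0 if i == emotion_id else 0.0) for i, name in enumerate(blend_shapes)}
```

For an eye-blink blend shape, where 100 means fully closed, the weight would be `100 - ratio_to_weight(ear, closed, opened)`. In Unity the resulting values would be passed to `SkinnedMeshRenderer.SetBlendShapeWeight`.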

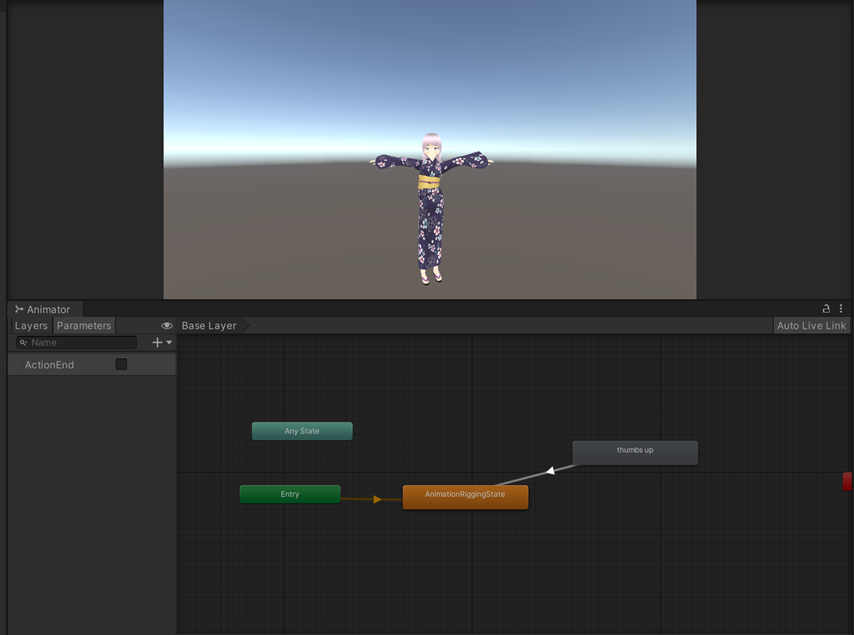

Animation Triggering

The detected gesture ID drives the Animator's transitions to trigger the avatar's corresponding animation.
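Because gesture predictions can change from frame to frame, firing an Animator transition on every raw prediction could restart animations repeatedly. The sketch below is a hypothetical debouncer, not the author's implementation. It reports a trigger name only after the same gesture ID has been predicted for `hold_frames` consecutive frames, fires once per stable gesture, and re-arms when the prediction changes or the hand disappears. The gesture-to-trigger mapping and `hold_frames` are assumptions. On the Unity side, the returned name could be passed to `Animator.SetTrigger`.

```python
from typing import Dict, Optional


class GestureTrigger:
    """Hypothetical debouncer mapping stable gesture IDs to Animator trigger names."""

    def __init__(self, triggers: Dict[int, str], hold_frames: int = 5) -> None:
        self.triggers = triggers            # gesture ID -> trigger name (hypothetical)
        self.hold_frames = hold_frames      # frames a prediction must persist
        self.candidate: Optional[int] = None
        self.count = 0
        self.fired: Optional[int] = None    # last stable prediction acted on

    def update(self, gesture_id: Optional[int]) -> Optional[str]:
        """Feed one frame's prediction (None = no hand); return a trigger name or None."""
        if gesture_id == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = gesture_id, 1
        if self.count < self.hold_frames or self.candidate == self.fired:
            return None
        # The prediction is stable and differs from the last stable one.
        self.fired = self.candidate
        if self.candidate is None:
            return None  # hand stably absent: re-arm without triggering
        # Gesture IDs without a mapped animation (e.g. a "no gesture" class) yield None.
        return self.triggers.get(self.candidate)
```

Holding a gesture therefore plays its animation once, and the same gesture can trigger again after the hand is lowered or another gesture is shown.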

Outcomes

Automatic control of the virtual avatar's 3D body movement, facial and emotional expression, and animation triggering, powered by a combination of AI models.