ArtiFade: Learning to Invert Clean Concepts from Unclean Images

Student name: Yang Shuya

Student number: 3035832488

Collaborators: Mr. Hao, Shaozhe; Mr. Cao, Yukang

Supervisor name: Dr. Kenneth, K.Y. Wong

Introduction

Subject-driven image generation has advanced significantly over the past two years. Existing methods can generate images from customized prompts while faithfully reconstructing the given subject. However, these methods typically require clean, high-quality input images. This prerequisite often does not hold in real-world scenarios, where inputs may be polluted by watermarks or other artifacts. We introduce ArtiFade, a novel approach to generating high-quality, subject-driven images from unclean inputs. ArtiFade partially fine-tunes a diffusion model while simultaneously training an artifact-free embedding. In extensive experiments, ArtiFade effectively removes both in-distribution and out-of-distribution artifacts while preserving high subject fidelity during reconstruction. This website presents only the high-level ideas and general experiments.
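The unclean inputs described above are images polluted by artifacts such as watermarks. As a purely illustrative sketch (an assumption, not necessarily how the project's datasets were built), such an input can be simulated by alpha-blending an artifact patch onto a clean image. The helper `add_synthetic_watermark` and its parameters are hypothetical.

```python
import numpy as np

def add_synthetic_watermark(image, mark, top, left, alpha=0.5):
    """Return a copy of `image` with `mark` alpha-blended at (top, left).

    Hypothetical helper for illustration only. Both arrays are float images
    in [0, 1] with shape (H, W, C); `alpha` is the watermark opacity.
    """
    out = image.astype(float, copy=True)
    h, w = mark.shape[:2]
    region = out[top:top + h, left:left + w]
    # Linear blend: keep (1 - alpha) of the original pixels, add alpha of the mark.
    out[top:top + h, left:left + w] = (1.0 - alpha) * region + alpha * mark
    return out

# Usage: a black 4x4 RGB "clean" image with a white 2x2 mark at 50% opacity.
clean = np.zeros((4, 4, 3))
unclean = add_synthetic_watermark(clean, np.ones((2, 2, 3)), top=1, left=1)
print(unclean[1, 1], unclean[0, 0])  # → [0.5 0.5 0.5] [0. 0. 0.]
```

The input image is copied rather than modified in place, so a clean/unclean pair can be kept side by side.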

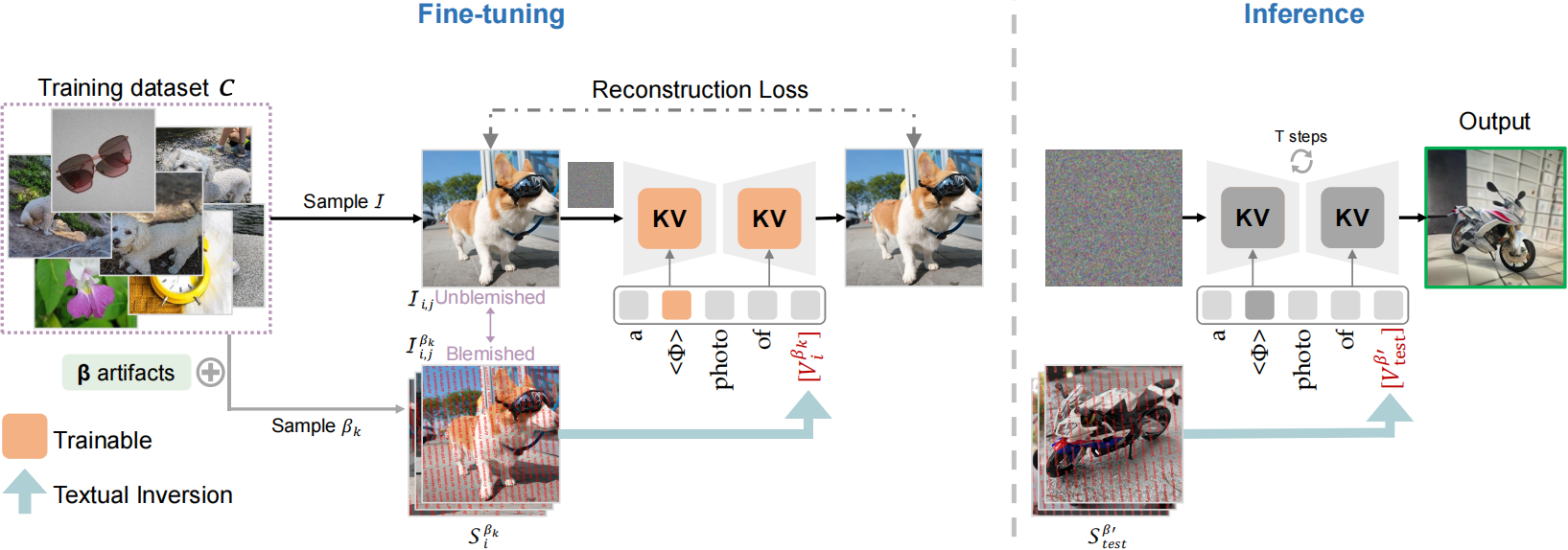

Methodology

Workflow of ArtiFade. Left: during ArtiFade fine-tuning, we minimize the reconstruction loss between the clean image and the image generated from its corresponding blemished embedding. Right: we evaluate our method by using the fine-tuned U-Net model with a new type of blemished embedding.
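The fine-tuning objective described above can be sketched as a toy NumPy training step. This is a minimal sketch under stated assumptions: the "reconstruction loss" is taken to be the standard noise-prediction (epsilon-prediction) denoising loss of latent diffusion models; a tiny linear map `W` stands in for the partially fine-tuned U-Net layers; and the conditioning is assumed to be the concatenation of a frozen blemished embedding (assumed learned beforehand, e.g. via Textual Inversion on the unclean images) and a trainable artifact-free embedding `phi`. All names, sizes, and the noise schedule are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D_LATENT, D_EMB, T = 8, 4, 1000  # toy sizes (hypothetical)

# Assumed linear beta schedule; alpha_bars[t] = prod_{s<=t} (1 - beta_s).
betas = np.linspace(1e-4, 0.02, T)
alpha_bars = np.cumprod(1.0 - betas)

def training_step(W, phi, clean_latent, blemished_emb, lr=1e-2):
    """One hypothetical fine-tuning step on a single (clean image, blemished embedding) pair.

    W:             trainable weights of a toy linear noise predictor, standing in
                   for the partially fine-tuned U-Net layers
    phi:           trainable artifact-free embedding
    clean_latent:  latent of the CLEAN image, i.e. the reconstruction target
    blemished_emb: embedding inverted from the UNCLEAN images; frozen here
    """
    t = rng.integers(0, T)
    eps = rng.standard_normal(D_LATENT)
    # Forward diffusion applied to the clean latent.
    z_t = np.sqrt(alpha_bars[t]) * clean_latent + np.sqrt(1.0 - alpha_bars[t]) * eps
    # Condition on the blemished embedding plus the artifact-free embedding (assumed concatenation).
    x = np.concatenate([z_t, blemished_emb, phi])
    eps_pred = W @ x
    loss = float(np.mean((eps_pred - eps) ** 2))
    # Hand-derived gradients of the mean-squared error for this linear model.
    g_pred = 2.0 * (eps_pred - eps) / D_LATENT
    g_W = np.outer(g_pred, x)
    g_phi = W[:, D_LATENT + D_EMB:].T @ g_pred
    # Only W and phi are updated; blemished_emb is left untouched.
    return W - lr * g_W, phi - lr * g_phi, loss

# Usage: a few hundred steps on one toy pair.
W = 0.1 * rng.standard_normal((D_LATENT, D_LATENT + 2 * D_EMB))
phi = np.zeros(D_EMB)
clean_latent = rng.standard_normal(D_LATENT)
blemished_emb = rng.standard_normal(D_EMB)
for _ in range(200):
    W, phi, loss = training_step(W, phi, clean_latent, blemished_emb)
```

The point the sketch illustrates is the pairing: the denoising target is built from the clean image, while the conditioning comes from an embedding learned on unclean images, so minimizing the loss pushes the trainable parts toward producing clean reconstructions from blemished conditioning.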

Experiments

Evaluation metrics

(1) the fidelity of subject reconstruction (IDINO and ICLIP): similarity between the clean datasets and the generated images

(2) the fidelity of text conditioning (TCLIP): similarity between the text prompts and the generated images

(3) the effectiveness of mitigating the impact of artifacts (RDINO and RCLIP): similarity between the unclean datasets and the generated images
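The three groups of metrics above can be sketched as cosine similarities between feature vectors. This assumes, as in common subject-driven generation benchmarks, that each score is a mean cosine similarity between DINO or CLIP embeddings; the feature extractors are not shown, and the function names are hypothetical. RDINO and RCLIP are computed here exactly as the text describes (similarity to the unclean dataset); the project's precise definition may differ.

```python
import numpy as np

def _normalize(x):
    # Scale every row (one feature vector per image or prompt) to unit length.
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def mean_pairwise_cosine(a, b):
    """Mean cosine similarity over all (row of a, row of b) pairs."""
    return float((_normalize(a) @ _normalize(b).T).mean())

def mean_matched_cosine(a, b):
    """Mean cosine similarity between row i of a and row i of b."""
    return float(np.sum(_normalize(a) * _normalize(b), axis=1).mean())

def compute_scores(gen_dino, clean_dino, unclean_dino,
                   gen_clip, clean_clip, unclean_clip, prompt_clip):
    # gen_*: features of generated images; clean_* / unclean_*: features of the
    # input datasets; prompt_clip: CLIP text feature of each generated image's prompt.
    return {
        "IDINO": mean_pairwise_cosine(gen_dino, clean_dino),
        "ICLIP": mean_pairwise_cosine(gen_clip, clean_clip),
        "TCLIP": mean_matched_cosine(gen_clip, prompt_clip),
        "RDINO": mean_pairwise_cosine(gen_dino, unclean_dino),
        "RCLIP": mean_pairwise_cosine(gen_clip, unclean_clip),
    }

# Sanity check on hand-made vectors: identical directions score 1.0 and
# orthogonal ones 0.0, so comparing the 2x2 identity with itself averages 0.5.
print(mean_pairwise_cosine(np.eye(2), np.eye(2)))  # → 0.5
print(mean_matched_cosine(np.eye(2), 3 * np.eye(2)))  # → 1.0
```

Pairwise averaging suits the image-to-dataset scores, where no generated image corresponds to a particular reference image; the text score instead pairs each image with the prompt that produced it.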

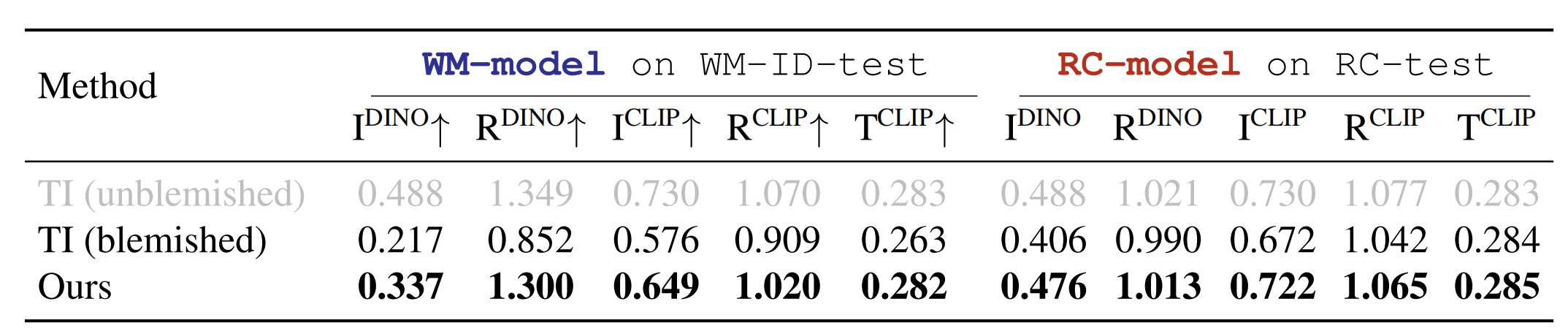

Quantitative Comparisons

We observe that using blemished embeddings in Textual Inversion degrades performance on every metric. In contrast, our method consistently achieves higher scores than Textual Inversion with blemished embeddings on every metric, demonstrating the effectiveness of ArtiFade.


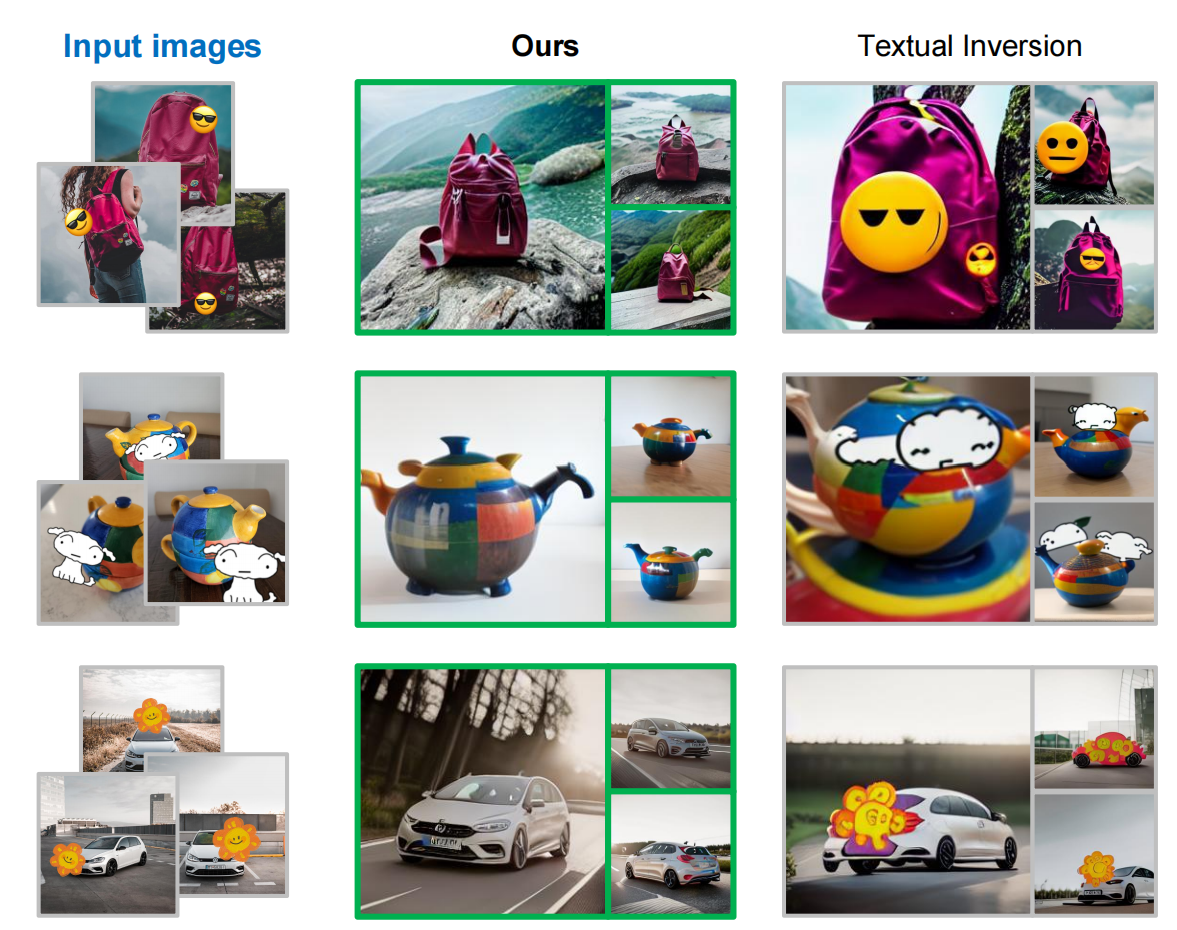

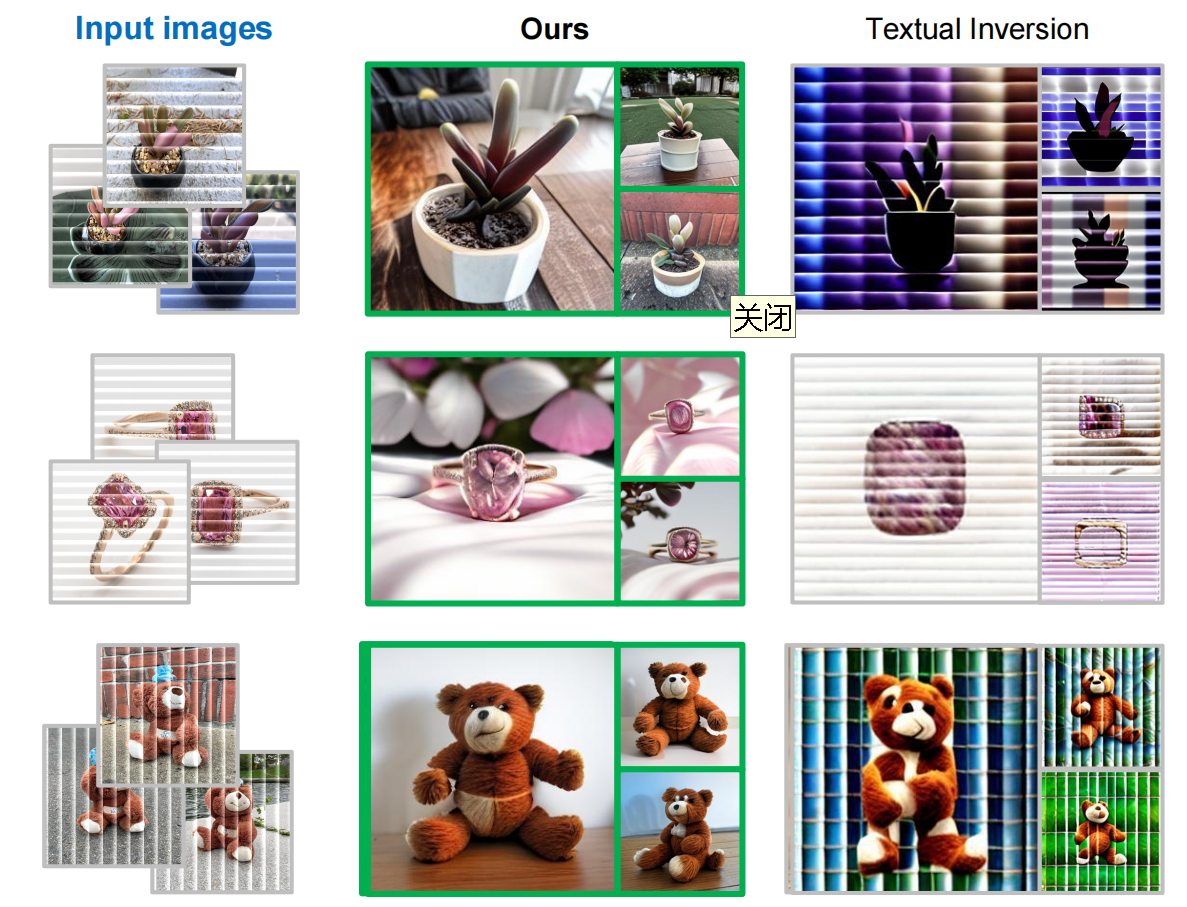

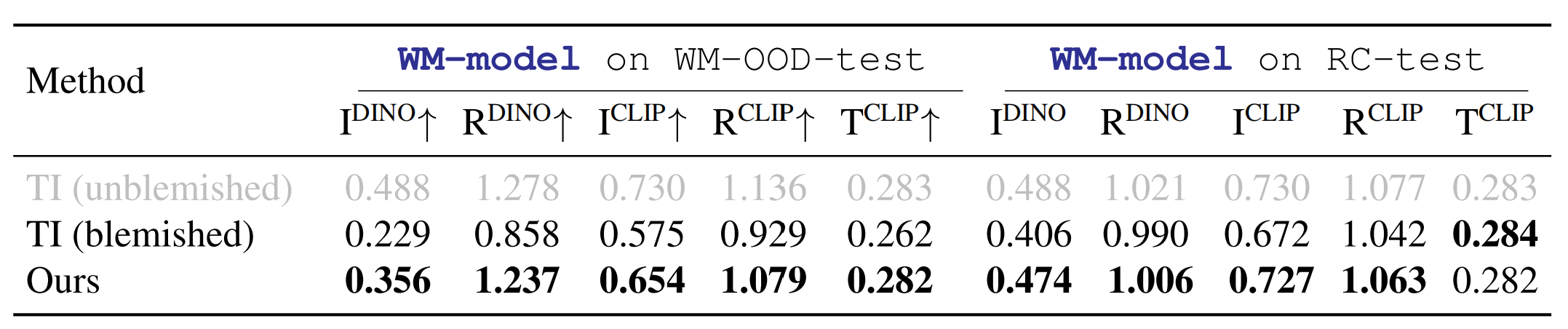

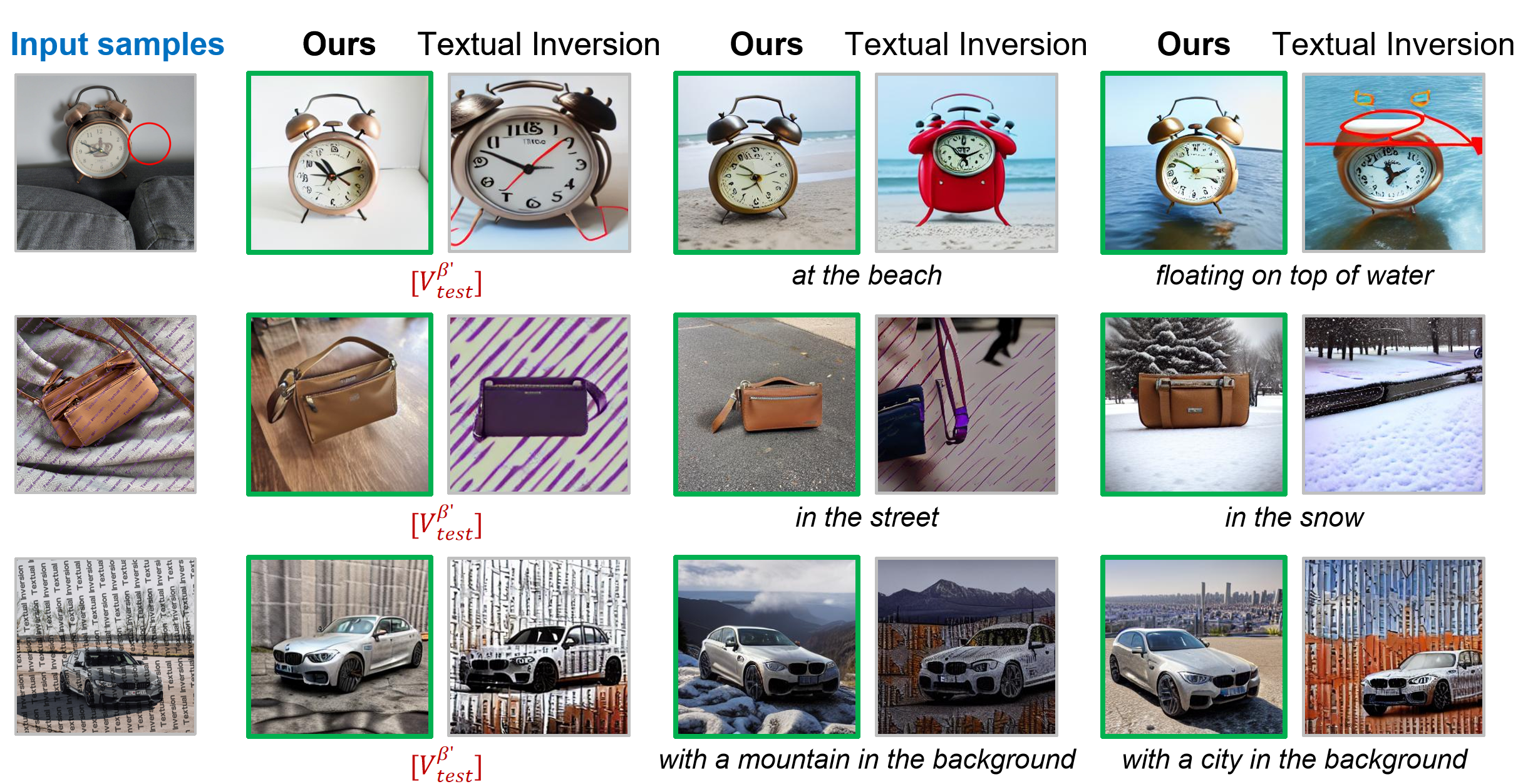

Qualitative Comparisons

In-distribution comparisons

Out-of-distribution comparisons

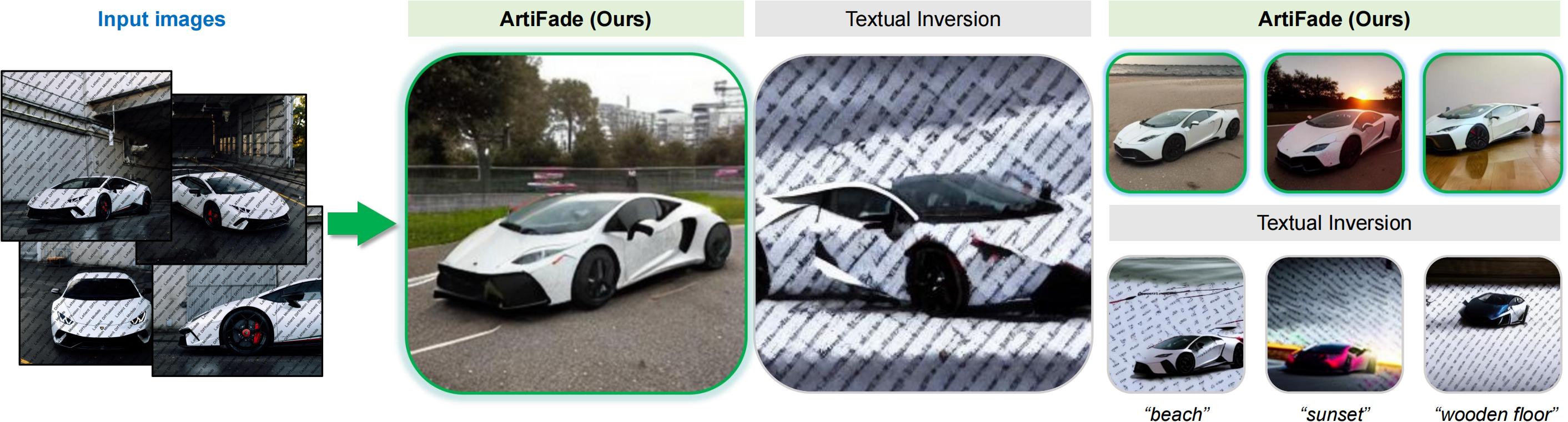

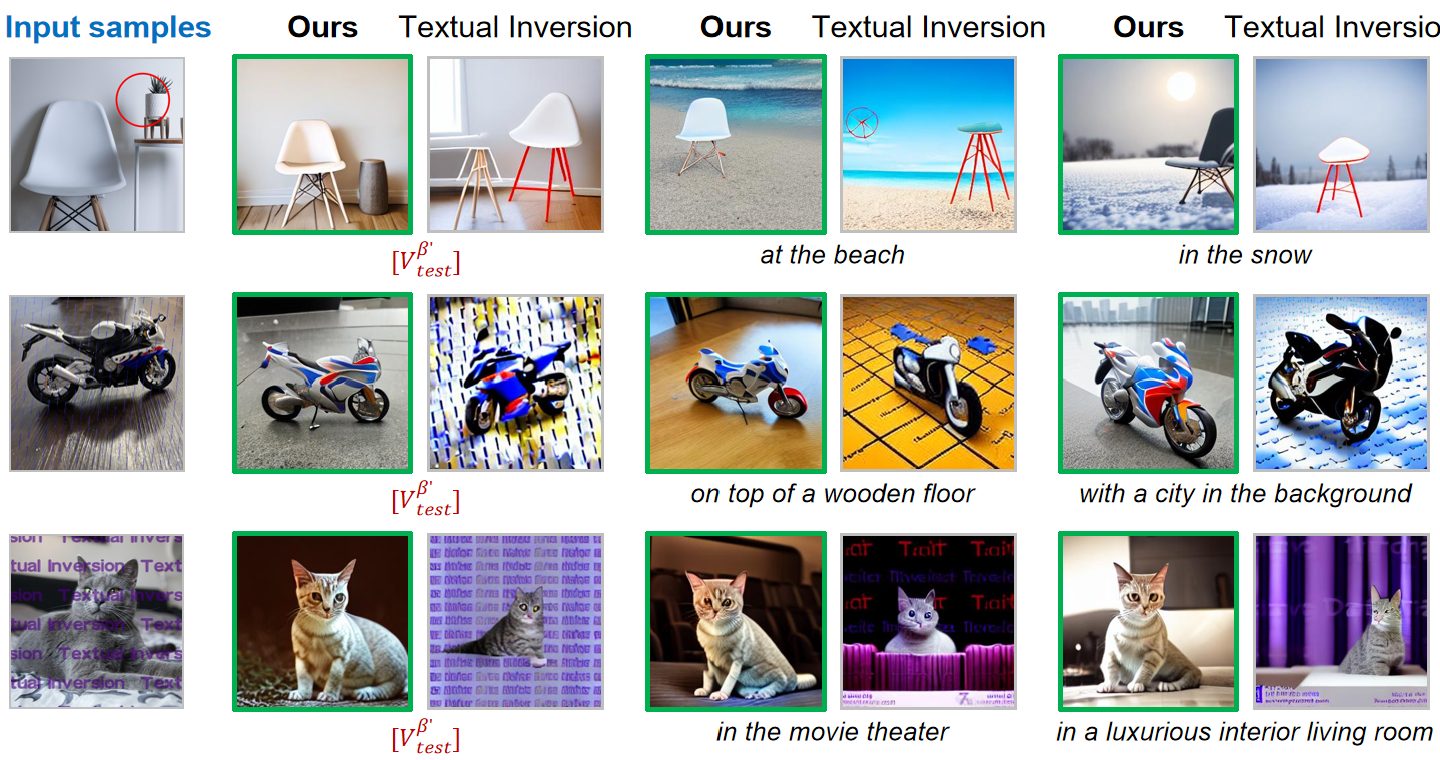

Applications

Our ArtiFade model can be applied to remove various unwanted artifacts, e.g., stickers and glass effects, from the input images used for subject-driven image generation.